Optimizing Event Processing at Twitter: A Deep Dive


Introduction to Twitter's Event Processing

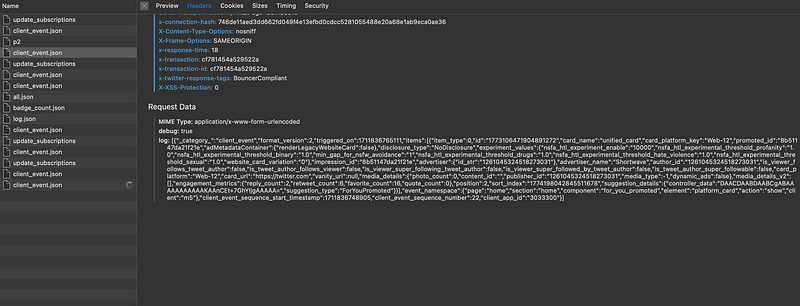

Twitter processes roughly 400 billion events in real time and generates about a petabyte of data every day. The data comes from many sources, including distributed databases, Kafka, and Twitter's own event buses.

In this article, we will explore:

- The previous methods Twitter employed for event processing and their shortcomings.

- The business and user challenges that led to a transition to a new architecture.

- The details of the new architecture.

- A performance comparison between the old and new systems.

Internal Tools for Event Processing

Twitter utilizes several internal tools to process events effectively:

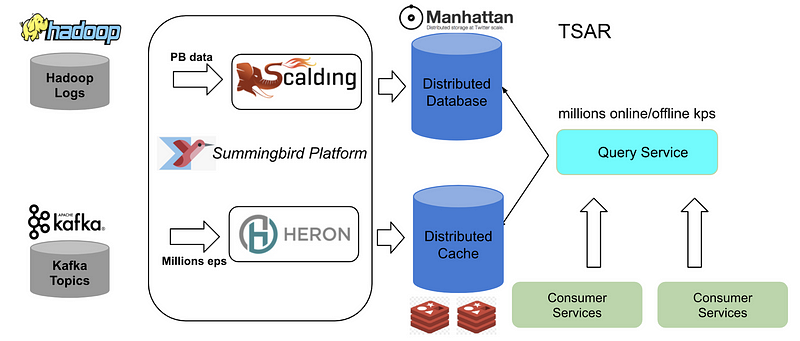

- Scalding: A Scala library that makes it easier to specify Hadoop MapReduce jobs. It is built on top of Cascading, a Java library that abstracts away low-level Hadoop details, so jobs can be written in idiomatic Scala.

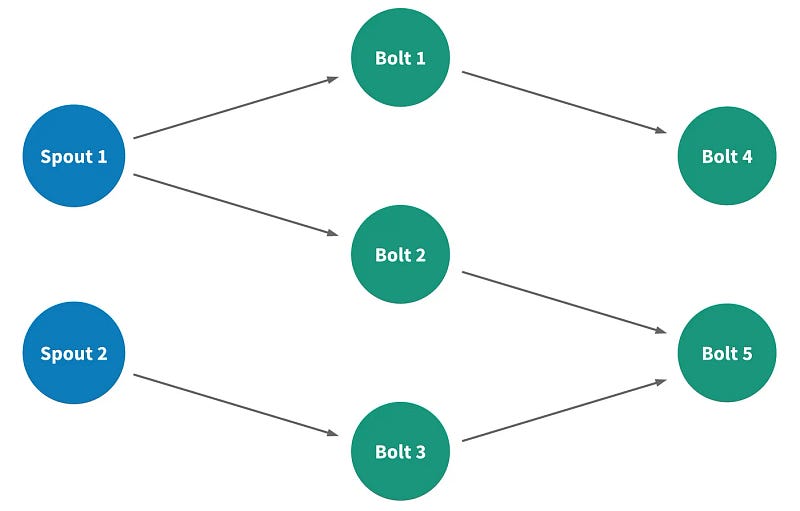

- Heron: Twitter's streaming engine, developed to handle petabytes of data, improve developer productivity, and simplify debugging. In Heron, a streaming application is called a topology: a directed acyclic graph whose nodes are data-computing elements and whose edges are the data streams flowing between them.

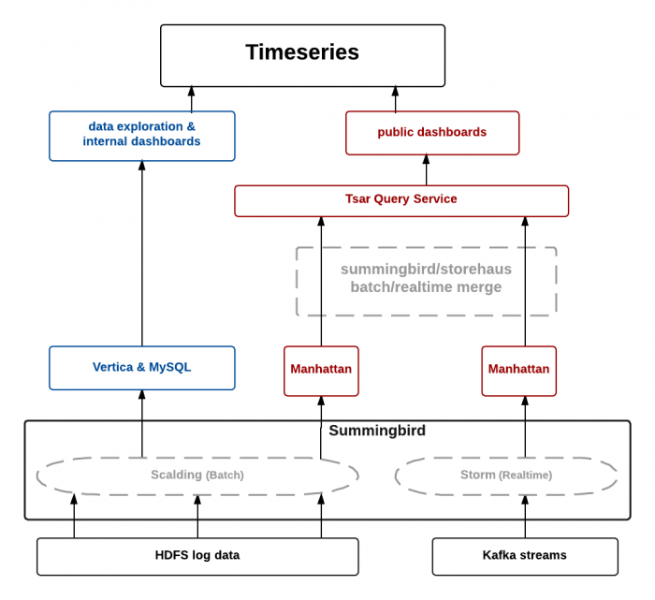

- TimeSeriesAggregator (TSAR): A robust framework designed for real-time event time series aggregation, primarily for monitoring user interactions with tweets across various dimensions.
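To make the idea of time series aggregation across dimensions concrete, here is a minimal sketch in plain Python. It is not TSAR's API; the event fields (`ts`, `tweet_id`, `action`, `country`) and the one-minute bucket size are assumptions chosen for illustration.

```python
from collections import Counter
from datetime import datetime, timezone


def minute_bucket(ts: float) -> str:
    """Truncate a Unix timestamp to its UTC minute."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%dT%H:%M")


def aggregate(events, dimensions):
    """Count events per (minute bucket, dimension values...) key."""
    counts = Counter()
    for event in events:
        key = (minute_bucket(event["ts"]),) + tuple(event[d] for d in dimensions)
        counts[key] += 1
    return counts


# Hypothetical interaction events; field names are assumptions.
events = [
    {"ts": 0.0, "tweet_id": "t1", "action": "like", "country": "IN"},
    {"ts": 30.0, "tweet_id": "t1", "action": "like", "country": "US"},
    {"ts": 61.0, "tweet_id": "t1", "action": "retweet", "country": "IN"},
    {"ts": 62.0, "tweet_id": "t2", "action": "like", "country": "IN"},
]

# The same event stream aggregated along two different sets of dimensions.
by_tweet_action = aggregate(events, ["tweet_id", "action"])
by_country = aggregate(events, ["country"])
```

The same stream is rolled up twice, once per tweet and action and once per country, which is the sense in which interactions are monitored "across various dimensions".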

With these internal tools in mind, let's look at how Twitter's event processing system evolved.

Understanding Twitter's Event System

Twitter's features are backed by microservices running across the globe. These services generate events that are sent to an event aggregation layer built on top of an open-source project from Meta. This layer groups the events, runs aggregation jobs, and stores the results in HDFS. The events then go through further processing, such as format conversion and recompression.

Old Architecture: A Closer Look

Twitter's previous architecture followed the lambda architecture, which consists of three layers: batch, speed, and serving. The batch layer processed client logs stored in HDFS, while the speed layer consumed real-time data from Kafka topics. The processed data was stored in distributed systems such as Manhattan and Nighthawk.
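The way the three lambda layers fit together can be sketched as follows. This is an illustrative model only, not Twitter's code: the `cutoff` timestamp, the event fields, and the `ServingLayer` class are assumptions. The batch view covers everything up to the last batch run, the speed view covers what arrived since, and the serving layer adds the two.

```python
from collections import Counter


def build_view(events):
    """Count events per tweet; used for both the batch and the speed view."""
    return Counter(e["tweet_id"] for e in events)


class ServingLayer:
    """Answers queries by combining the batch view with the real-time view."""

    def __init__(self, batch_view, speed_view):
        self.batch_view = batch_view
        self.speed_view = speed_view

    def count(self, tweet_id):
        # Counter returns 0 for keys it has not seen.
        return self.batch_view[tweet_id] + self.speed_view[tweet_id]


# Hypothetical events; `ts` and `tweet_id` are assumed field names.
events = [
    {"ts": 10, "tweet_id": "t1"},
    {"ts": 20, "tweet_id": "t1"},
    {"ts": 30, "tweet_id": "t2"},
    {"ts": 110, "tweet_id": "t1"},
    {"ts": 120, "tweet_id": "t2"},
]
cutoff = 100  # assumed time of the last completed batch run

batch_view = build_view(e for e in events if e["ts"] < cutoff)
speed_view = build_view(e for e in events if e["ts"] >= cutoff)
serving = ServingLayer(batch_view, speed_view)
```

Because every metric is computed twice, once per path, the two paths have to be kept consistent, which is part of the operational cost of this design.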

Challenges in the Old System

This architecture struggled when real-time traffic surged, for example during major occasions such as the FIFA World Cup. In those cases the system experienced backpressure, which led to delays and potential data loss.

For instance, restarting the Heron containers to relieve the pressure could lose events, causing inaccuracies in the aggregated data. Similarly, the batch path could introduce delays, leaving clients with outdated metrics.
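The effect of a traffic surge and of a restart can be illustrated with a toy simulation. This is a deliberately simplified model under stated assumptions, not a description of Heron internals: time advances in ticks, the processor handles a fixed number of events per tick, and a restart discards whatever is still waiting in memory.

```python
def simulate(arrivals_per_tick, capacity_per_tick, restart_at=None):
    """Track backlog growth under load and events lost to a restart."""
    backlog = processed = lost = peak_backlog = 0
    for tick, arrivals in enumerate(arrivals_per_tick):
        if tick == restart_at:
            # Assumption: in-flight events are not persisted across a restart.
            lost += backlog
            backlog = 0
        backlog += arrivals
        done = min(capacity_per_tick, backlog)
        backlog -= done
        processed += done
        peak_backlog = max(peak_backlog, backlog)
    return {
        "processed": processed,
        "lost": lost,
        "backlog": backlog,
        "peak_backlog": peak_backlog,
    }


# Hypothetical load: a surge in ticks 2 and 3 exceeds the capacity of 10.
traffic = [5, 5, 20, 20, 5, 5]

no_restart = simulate(traffic, capacity_per_tick=10)
with_restart = simulate(traffic, capacity_per_tick=10, restart_at=4)
```

Without a restart nothing is lost, but a backlog builds up and events are processed late. With a restart the backlog disappears, at the price of the events that were waiting in it.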

The implications of these challenges were significant:

- Twitter's ad services, a key revenue source, suffered from inaccuracies.

- Data products providing insights on engagement were negatively impacted.

- The lag time for event processing could hinder timely user interactions.

New Architecture: A Paradigm Shift

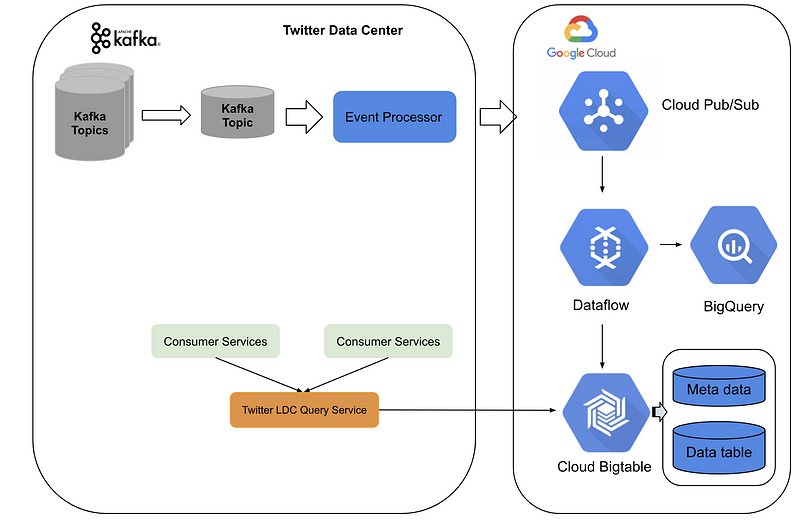

Twitter's new architecture combines services in its own data centers with Google Cloud Platform. On premises, events from Kafka topics are converted into Pub/Sub topic events. On Google Cloud, streaming Dataflow jobs perform the real-time aggregations and write the results to Bigtable.
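The flow of an event through this pipeline can be sketched with in-memory stand-ins. No Google Cloud or Kafka client library is used here: the record and message shapes, the `tweet_id#action` row key, and the function names are all assumptions made for illustration, and a plain dictionary stands in for the Bigtable table.

```python
import json
from collections import defaultdict


def kafka_to_pubsub(record):
    """Convert a Kafka-style record into a Pub/Sub-style message (assumed shapes)."""
    return {
        "data": record["value"],  # payload bytes are passed through unchanged
        "attributes": {"source_topic": record["topic"]},
    }


def aggregate_into(store, messages):
    """Stand-in for the streaming job: count events per row key."""
    for message in messages:
        event = json.loads(message["data"])
        row_key = f'{event["tweet_id"]}#{event["action"]}'  # hypothetical key layout
        store[row_key] += 1
    return store


# Hypothetical Kafka records carrying JSON-encoded interaction events.
kafka_records = [
    {"topic": "interactions", "value": json.dumps({"tweet_id": "t1", "action": "like"}).encode()},
    {"topic": "interactions", "value": json.dumps({"tweet_id": "t1", "action": "like"}).encode()},
    {"topic": "interactions", "value": json.dumps({"tweet_id": "t2", "action": "reply"}).encode()},
]

pubsub_messages = [kafka_to_pubsub(r) for r in kafka_records]
table = aggregate_into(defaultdict(int), pubsub_messages)  # dict standing in for the store
```

The point of the sketch is the shape of the design: a single streaming path from ingestion to the serving store, with no separate batch job to reconcile against.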

This hybrid architecture allows Twitter to process millions of events per second with a low latency of around 10 ms, significantly enhancing operational efficiency while reducing costs associated with batch processing.

Performance Comparison

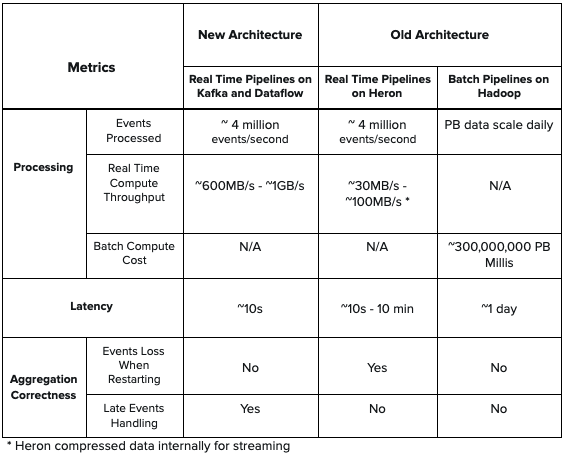

The new architecture outperforms the previous Heron topology, with lower latency and higher throughput. It also counts late-arriving events instead of losing them, which simplifies the design and reduces compute costs.
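What "handling late events" means can be shown with a small event-time windowing sketch. It is not the actual pipeline code: the 60-second window, the `event_ts` field, and the `drop_late` switch are assumptions. An event is late when it arrives after events from a newer window have already been seen.

```python
from collections import Counter

WINDOW_SECONDS = 60  # assumed window size


def window_start(ts):
    """Start of the fixed window that contains the event timestamp."""
    return int(ts) // WINDOW_SECONDS * WINDOW_SECONDS


def count_by_event_time(events, drop_late=False):
    """Count events per window; optionally drop events that arrive late."""
    counts = Counter()
    newest_window = 0
    for event in events:  # events are iterated in arrival order
        window = window_start(event["event_ts"])
        if drop_late and window < newest_window:
            continue  # a processor without late-event handling loses this event
        counts[window] += 1
        newest_window = max(newest_window, window)
    return counts


# Arrival order; the fourth event belongs to the first window but arrives late.
arrivals = [
    {"event_ts": 5},
    {"event_ts": 20},
    {"event_ts": 65},
    {"event_ts": 50},
    {"event_ts": 70},
]

with_late_handling = count_by_event_time(arrivals)
without_late_handling = count_by_event_time(arrivals, drop_late=True)
```

With late handling, the straggler is added to the window it belongs to, so the count for that window stays accurate even though the event arrived out of order.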

Conclusion: Achievements through Transition

By moving from the old TSAR architecture to a hybrid model that uses both its own data centers and Google Cloud, Twitter can process billions of events in real time with low latency, high accuracy, and a simpler operational footprint.

Author: Mayank Sharma

I am Mayank, a technology enthusiast who shares insights on topics I learn about. For more detailed explorations, visit my blog or support my work.

Website: imayanks.com