Bringing Together BigQuery and Apache Spark for Enhanced Data Analysis


Chapter 1: Introduction to the Integration

For many users, the long-awaited integration of Google BigQuery with Apache Spark has finally arrived. With this new feature, users can call stored procedures from BigQuery SQL whose logic runs on Apache Spark.

Spark is designed to process large datasets from many different sources, and it achieves high performance by distributing the work across a cluster of machines. Google has now announced that users can create and run Apache Spark stored procedures written in Python directly in BigQuery, and that doing so is as straightforward as running an ordinary SQL stored procedure. A stored procedure in BigQuery is a named collection of statements that can be called from other queries or stored procedures; it can accept input arguments and return output values.
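To make the idea of input and output values concrete, here is a minimal Python sketch that assembles a BigQuery script which calls a procedure and reads back one `OUT` argument. The helper `build_call_script` and the sample procedure path are hypothetical, and the sketch assumes the procedure declares its last parameter as `OUT`; the generated statements themselves use standard BigQuery scripting (`DECLARE`, `CALL`, `SELECT`).

```python
from typing import List


def build_call_script(procedure: str, in_args: List[str], out_var: str, out_type: str) -> str:
    """Return a BigQuery script that calls `procedure` and reads back one OUT argument.

    Hypothetical helper for illustration. It assumes the procedure's last
    parameter is declared OUT, and that every entry in `in_args` is already a
    valid SQL literal or a query-parameter reference such as '@min_year'.
    """
    args = ", ".join([*in_args, out_var])
    return "\n".join(
        [
            f"DECLARE {out_var} {out_type};",  # variable that receives the OUT value
            f"CALL `{procedure}`({args});",    # invoke the stored procedure
            f"SELECT {out_var};",              # return the OUT value as a result row
        ]
    )


print(build_call_script("my-project.my_dataset.count_rows", ["@min_year"], "row_count", "INT64"))
```

Because the caller only sees a procedure name and its arguments, the same `CALL` works whether the procedure body is plain SQL or, as in this article, PySpark code.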

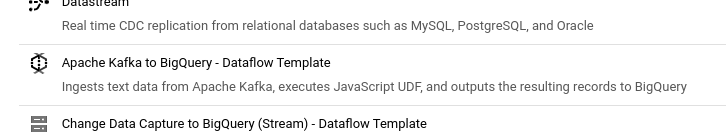

Before writing any code, you need to create a BigQuery connection of type Apache Spark. Fortunately, this setup is quite simple:

Implementing a Connection to Apache Spark — Image by Author
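The same connection can also be created from the command line with the `bq` tool. The sketch below only assembles the commands rather than running them. The flags (`--connection`, `--connection_type=SPARK`, `--project_id`, `--location`) and the use of `bq show --connection` to look up the connection's service account are assumed from the BigQuery connection documentation and should be checked against `bq mk --help` before use; the helper names and sample IDs are hypothetical.

```python
import shlex
from typing import List


def bq_make_spark_connection(project_id: str, location: str, connection_id: str) -> List[str]:
    """Assemble the `bq` call that creates a Spark connection (hypothetical helper).

    Flag names are assumptions based on the BigQuery documentation;
    verify them with `bq mk --help` for your Cloud SDK version.
    """
    return [
        "bq", "mk", "--connection",
        "--connection_type=SPARK",
        f"--project_id={project_id}",
        f"--location={location}",
        connection_id,
    ]


def bq_show_connection(project_id: str, location: str, connection_id: str) -> List[str]:
    """Assemble the `bq` call that describes the connection (hypothetical helper).

    Its output is expected to include the service account that must be
    granted the permissions mentioned in the documentation.
    """
    return ["bq", "show", "--connection", f"{project_id}.{location}.{connection_id}"]


# Print shell-safe command lines instead of executing them.
print(shlex.join(bq_make_spark_connection("my-project", "us", "my-spark-connection")))
print(shlex.join(bq_show_connection("my-project", "us", "my-spark-connection")))
```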

Certain permissions may also need to be configured; for full guidance, consult the official documentation, "Work with stored procedures for Apache Spark" (BigQuery, Google Cloud, cloud.google.com). At the time of writing, this feature is in Preview and is covered by the Pre-GA Offerings Terms of the Google Cloud Terms of Service.

Once the connection is in place, users can start writing code and create stored procedures that use Apache Spark. Here is the general syntax:

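```sql
CREATE PROCEDURE YOUR_PROJECT_ID.YOUR_DATASET.PROCEDURE_NAME(PROCEDURE_ARGUMENT)
-- CONNECTION_NAME is the fully qualified connection: project.region.connection_id
WITH CONNECTION CONNECTION_NAME
OPTIONS (
  engine = "SPARK",
  -- Cloud Storage URI of the main PySpark file;
  -- omit this option when the code is supplied inline with AS below
  main_file_uri = "URI"
)
LANGUAGE PYTHON [AS PYSPARK_CODE]
```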
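The blueprint above can also be filled in programmatically. The following minimal Python sketch renders the `CREATE PROCEDURE` statement in either form: a PySpark file referenced through `main_file_uri`, or inline code supplied with `AS` as a raw triple-quoted string. The helper name and the project, dataset, connection, and bucket names are hypothetical, and the embedded PySpark snippet assumes the spark-bigquery connector (`format("bigquery")`) is available in the Spark runtime, as in Google's own examples.

```python
from typing import Optional


def build_spark_procedure_ddl(
    procedure: str,
    connection: str,
    pyspark_code: Optional[str] = None,
    main_file_uri: Optional[str] = None,
    args: str = "",
) -> str:
    """Render a CREATE PROCEDURE statement for a BigQuery Spark stored procedure.

    Hypothetical helper for illustration. Pass exactly one of `pyspark_code`
    (inline body) or `main_file_uri` (a gs:// path to the main PySpark file).
    """
    if (pyspark_code is None) == (main_file_uri is None):
        raise ValueError("pass exactly one of pyspark_code or main_file_uri")

    options = ['engine="SPARK"']
    if main_file_uri is not None:
        options.append(f'main_file_uri="{main_file_uri}"')

    ddl = (
        f"CREATE PROCEDURE `{procedure}`({args})\n"
        f"WITH CONNECTION `{connection}`\n"
        f"OPTIONS({', '.join(options)})\n"
        "LANGUAGE PYTHON"
    )
    if pyspark_code is not None:
        # A raw triple-quoted string keeps the Python source unescaped,
        # so the code itself must not contain three double quotes in a row.
        if '"""' in pyspark_code:
            raise ValueError('inline code must not contain """')
        ddl += f' AS R"""\n{pyspark_code}\n"""'
    return ddl


# Hypothetical inline PySpark body: read a public table and count words.
PYSPARK_CODE = '''\
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("spark-bigquery-demo").getOrCreate()
words = spark.read.format("bigquery").option("table", "bigquery-public-data:samples.shakespeare").load()
words.groupBy("word").sum("word_count").show()'''

print(build_spark_procedure_ddl(
    "my-project.my_dataset.spark_proc",
    "my-project.us.my-spark-connection",
    pyspark_code=PYSPARK_CODE,
))
```

The printed statement can be run in the BigQuery console; afterwards the procedure is invoked with an ordinary `CALL` statement, just like a SQL stored procedure.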

Thanks to its speed and its ability to handle large datasets from many different sources, Apache Spark suits a wide range of Big Data applications, so this move by Google is a logical next step. Spark is a well-established framework used by many individuals and organizations, and teams that already rely on it can now use it directly alongside BigQuery.

Key application areas for Apache Spark include:

- Data Integration

- Ad Hoc Analysis of Big Data

- Machine Learning

These areas overlap closely with BigQuery's own strengths, which makes this development good news for users.

Additionally, Google has introduced several other significant updates in recent weeks:

- Multi-statement transactions are now available in BigQuery SQL.

- Google BigLake, a new service for platform-independent data analysis: is it a new challenger to Snowflake, Redshift, and others?

- Enhanced integration of BigQuery and Colab, which makes it easy to use Python with Google BigQuery.

Chapter 2: Exploring Use Cases for BigQuery and Apache Spark

The first video, "Three Use Cases for BigQuery and Apache Spark", explores practical applications in which the two technologies work together, showing how they can be combined to handle large datasets.

Chapter 3: Comparing GCP BigQuery and Apache Spark

The second video, "GCP BigQuery vs Apache Spark", compares the two tools and highlights their respective strengths and use cases for data professionals.

Sources and Further Readings

[1] Google, BigQuery Release Notes (2022)

[2] Google, Work with stored procedures for Apache Spark (2022)